Physiology News Magazine

Granny’s going global…

Investigating the organisational and functional principles operating within the social brain involves establishing causative links between behaviour and multi-component neural systems. With the introduction of new techniques for monitoring brain activity, are we now advancing towards an understanding of how brain systems mediate social cognition? Alister Nicol and colleagues look at current strategies and some of the challenges lying ahead

Features


Alister U Nicol, Hanno Fischer, Andrew J Tate, & Keith M Kendrick

Laboratory of Behavioural & Cognitive Neuroscience, The Babraham Institute, Cambridge, UK

https://doi.org/10.36866/pn.63.32

Progressing in our career, finding a partner, leading a social life – living in a modern society is a complicated business. It is all about ‘how to play the game’, which ‘pack to join’, whom to interact with and how, in order to succeed. We are well equipped for this task: our brains possess specialised neural systems for perceiving, processing, assessing and memorising the vast amount of sensory information related to individual identity, emotional state and attraction, the interpretation of which guides our social behaviour. These neural systems are not unique to humans and have been demonstrated in many social mammals including monkeys, ungulates, and rodents (Tate et al. in press).

Many species use visual cues to identify one another and communicate social signals, with the face playing an important part in human and primate societies. Others, like rodents, use odour cues for individual recognition. While the sensory receptors in our eyes and noses are exquisitely sensitive in detecting and conveying visual and odour cues from other individuals to our brain, it is the brain which must decode the constant stream of information, interpret its relevant features, and memorise and recall them in a wide range of different social contexts.

So how do our brains achieve this? And how do we find out?

In humans, non-invasive techniques for monitoring brain activity such as fMRI, MEG and EEG, in the context of individuals carrying out visual or olfactory recognition tasks, have revealed the topography and global organisational aspects of the underlying brain systems. However, interactions amongst individual neurons within the neuronal networks involved have yet to be revealed.

Our current understanding of encoding strategies within such networks has been determined from the responses of individually recorded neurons. One major finding is that brains may encode complex visual stimuli, such as complete faces (e.g. Young & Yamane, 1992; Sugase et al. 1999) or an individual’s specific odour (e.g. Kendrick et al. 1992), using very few neurons – ‘Grandmother neurons’, named after a hypothetical cell encoding Granny. However, grandmother neuron schemes (also known as ‘sparse codes’ or ‘local encoding’) are not the only strategy brains use to encode complex stimuli: for example, odours (often mixtures with hundreds of components) are globally represented across a cell population, each cell responding in its own way with greater or lesser reproducibility (Laurent, 2002). The neural code for Granny’s face or smell, say Chanel No 5, is thereby held in the cross-cell activity patterns of the neuronal population (known as population or global encoding). Theoretically, the number of stimuli that can be encoded by an ensemble of neurons using population encoding increases exponentially with ensemble size, and so is much greater than using grandmother cells, in which the number of stimuli encoded increases only linearly with population size (Rolls et al. 1997).
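The capacity argument can be made concrete with a small sketch. It assumes idealised, noiseless neurons that each take one of a fixed number of activity levels (two by default: silent or active), which is a simplification for illustration and not the analysis used by Rolls et al. (1997). The function names are hypothetical.

```python
def grandmother_capacity(n_neurons: int) -> int:
    """Local code: one dedicated neuron per stimulus, so capacity grows linearly."""
    return n_neurons


def population_capacity(n_neurons: int, n_levels: int = 2) -> int:
    """Distributed code: each distinct activity pattern across the ensemble
    can stand for a stimulus, so capacity grows exponentially.

    n_levels is an assumed number of distinguishable activity levels per neuron.
    """
    return n_levels ** n_neurons


if __name__ == "__main__":
    # Compare the two schemes for a few ensemble sizes.
    for n in (1, 5, 10, 20):
        print(n, grandmother_capacity(n), population_capacity(n))
```

Under these assumptions, ten neurons could distinguish ten stimuli with a grandmother scheme but 1024 activity patterns with a population scheme. Real neurons are noisy, so the usable capacity of a population code would be lower than this upper bound.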

However, the process of perception is only one side of the problem. Our current knowledge of how brains ‘streamline’ the influx of sensory information and extract socially important features, such as the identity and emotional state of individuals, after perception is still inconsistent (Calder & Young, 2005). Are high-capacity encoding schemes involving entire networks of neurons used during the initial process of streamlining, whereas grandmother schemes represent a low-capacity but highly concise top-level code for memorising and recalling?

The biggest obstacle in answering these questions is that most brains consist of billions of cells (e.g. an estimated 50 billion in humans) – too many to be explored one by one. The output of any neuronal network is defined not only by the properties of its cells but even more by the interactions between those cells. Addressing network function, therefore, requires simultaneously monitoring the activities of many cells. However, most of the responsive neurons identified so far are scattered across rather large areas of the brain.

Over the last decade, many laboratories (ours included) have invested considerable time in developing techniques to monitor the activity of large ensembles of individual neurons, e.g. using real-time calcium imaging in transgenic mice. Another, more widely used approach is multi-electrode array (MEA) electrophysiology (e.g. Nicolelis & Ribeiro, 2002). Depending on the array size (in our lab we use arrays of up to 128 electrodes), MEA techniques allow simultaneous sampling from several hundred neurons across a relatively wide area of brain tissue (several mm²). Data capture rates are high; in our laboratory, as much as 100 Mb min⁻¹. If the arrays are implanted chronically, recordings can be made over a period of several months.

In general, the large volume of data requires that most labs develop data processing techniques including algorithms for discriminating the activities of individual neurons (each channel of MEA data may contain the activity of several neurons) and methods for statistical analyses (e.g. Horton et al. 2005) in order to determine the spatial and temporal relationships between them, and so decipher the neuronal code.

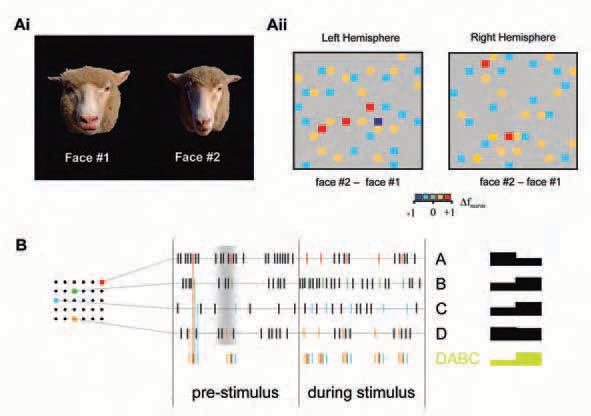

Our current research focuses on (1) the contribution of individual cells in an ensemble to the overall ensemble pattern of response to defined stimuli such as faces, facial expressions or odours. This includes overall array differences to analyse the reliability of a representation across a recorded ensemble of neurons and the amount of overlap between individual representations; (2) activity ‘hot-spots’, i.e. areas of neurons in close proximity changing their activity in response to stimulus presentation (Fig. 1), and alterations in the ‘temperature’ and location of these areas through learning and memory; (3) correlation analyses to explore whether global stimulus properties, such as identity or expression, are reflected by the degree of synchronization between neurons; and (4) stimulus encoding in spatiotemporal sequences of neuronal activity, i.e. ‘patterns-within-patterns’ (Fig. 2).
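As an illustration of the kind of correlation analysis mentioned in point (3), the following minimal sketch bins spike times and computes the Pearson correlation between every pair of neurons. It is one common way to quantify synchrony and is not necessarily the method used in our laboratory. The function names, the bin width and the example spike times are all hypothetical.

```python
from math import sqrt


def bin_spikes(spike_times_ms, t_stop_ms, bin_ms):
    """Count spikes in consecutive bins of width bin_ms over [0, t_stop_ms)."""
    n_bins = t_stop_ms // bin_ms
    counts = [0] * n_bins
    for t in spike_times_ms:
        idx = t // bin_ms
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return counts


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def synchrony_matrix(trains, t_stop_ms, bin_ms):
    """Pairwise correlation of binned spike counts for a list of spike trains."""
    binned = [bin_spikes(t, t_stop_ms, bin_ms) for t in trains]
    return [[pearson(a, b) for b in binned] for a in binned]


if __name__ == "__main__":
    # Made-up spike times (ms): neurons 0 and 1 fire together, neuron 2 does not.
    trains = [[5, 15, 55], [6, 16, 56], [25, 35, 75]]
    for row in synchrony_matrix(trains, t_stop_ms=100, bin_ms=10):
        print([round(r, 2) for r in row])
```

The result depends strongly on the chosen bin width: narrow bins capture only tightly synchronised firing, while wide bins also pick up slower co-variations in firing rate.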

What do we gain?

Many current hypotheses regarding network function underlying sensory encoding can only be tested by recording the activities of many neurons simultaneously. Furthermore, computer simulations (invaluable in understanding complex systems such as brains) inevitably rely upon sufficient supporting biological data that are representative of the systems studied. Equally important, cognitive brain research often requires highly trained animals. By maximising the data obtained from each animal, we reduce the number of animals needed – a duty of any bioscientist.

In summary, MEA techniques alone do not guarantee a successful understanding of the brain, nor do they make other techniques obsolete. They are a promising supplement aiming to bridge the gap between imaging and single-cell studies. The brain has a century-old track record of encrypting its functional principles. A combined strategy incorporating behavioural, imaging, single-cell and MEA techniques will play the central part in deciphering the code. The rest is down to the ultimate tool – our brain. After all, it takes a thief to catch a thief!

Acknowledgements

Research in our laboratory is funded by the BBSRC.

References

Calder AJ & Young AW (2005). Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci 6, 641-651.

Horton PM, Bonny L, Nicol AU, Kendrick KM & Feng JF (2005). Applications of multi-variate analysis of variance (MANOVA) to multielectrode array electrophysiology data. J Neurosci Methods 146, 22-41.

Kendrick KM, Levy F & Keverne EB (1992). Changes in the sensory processing of olfactory signals induced by birth in sheep. Science 256, 833-836.

Laurent G (2002). Olfactory network dynamics and the coding of multidimensional signals. Nat Rev Neurosci 3, 884-895.

Nicolelis MAL & Ribeiro S (2002). Multielectrode recordings: the next steps. Curr Opin Neurobiol 12, 602-606.

Rolls ET, Treves A & Tovee MJ (1997). The representational capacity of the distributed encoding of information provided by populations of neurons in primate temporal visual cortex. Exp Brain Res 114, 149-162.

Tate AJ, Fischer H, Leigh AE & Kendrick KM (2006). Behavioural and neurophysiological evidence for face identity and face emotion processing in animals. Phil Trans R Soc Lond B (in press).

Sugase Y, Yamane S, Ueno S & Kawano K (1999). Global and fine information coded by single neurons in the temporal visual cortex. Nature 400, 869-873.

Young MP & Yamane S (1992). Sparse population coding of faces in the inferotemporal cortex. Science 256, 1327-1331.