Physiology News Magazine

Echolocation in people

Humans can learn how to use echolocation, aiding the mobility, independence and wellbeing of people who are partially sighted or blind

Features

https://doi.org/10.36866/pn.126.20

Dr Lore Thaler

Associate Professor, Department of Psychology, Durham University, UK

One day in 2009, when I was a postdoc at Western University in Canada, I came across reports of people who were totally blind and could do things such as riding a bike, playing basketball and hiking using echolocation. Together with my postdoc advisor Mel Goodale I watched video clips about remarkable people like Daniel Kish and Juan Ruiz, nicknamed Human Bats. Both are blind and so skilled at echolocation that they go mountain biking and can tell what objects are without touching them. They also teach others (Fig.1). This was when I started working on human echolocation. Today in my lab we investigate human echolocation as a topic in its own right, and use it to understand how the human brain adapts to learning new skills.

How do humans use echolocation and how does this differ from other animals?

Bats and dolphins are well known for their ability to use echolocation. They emit bursts of sounds and listen to the echoes that bounce back to perceive their environment. Human echolocation uses the same technique. It relies on an initial audible emission, and subsequent reflection of sound from the environment. When people echolocate, they make audible emissions like mouth clicks, finger snaps, whistling, cane taps, or footsteps. These are all in the audible spectrum, as opposed to the ultrasound emissions that bats or dolphins use. Even though every person, blind or sighted, can learn how to echolocate, to date the most skilled human echolocators are blind (Kolarik et al., 2014; 2021).
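The emission-and-reflection principle described above implies a simple timing relationship: an echo arrives after the sound has travelled to the reflecting surface and back. The following is a minimal sketch of that relationship, not a method from the studies cited here. It assumes a speed of sound of 343 m/s (roughly dry air at 20 °C), and the function name `echo_delay_ms` and the example distances are chosen purely for illustration.

```python
# Assumption: speed of sound in dry air at about 20 degrees C.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_delay_ms(distance_m: float,
                  speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Round-trip travel time, in milliseconds, for a sound that leaves
    the emitter, reflects off a surface distance_m away, and returns."""
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    # The sound covers the distance twice: out to the surface and back.
    return 2.0 * distance_m / speed_m_per_s * 1000.0


# Illustrative distances only (not taken from any study).
for d in (0.5, 1.0, 2.0):
    print(f"{d:.1f} m -> {echo_delay_ms(d):.2f} ms")
```

Under these assumptions, a surface one metre away returns an echo a little under 6 ms after the emission, which gives a sense of the short time scales involved.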

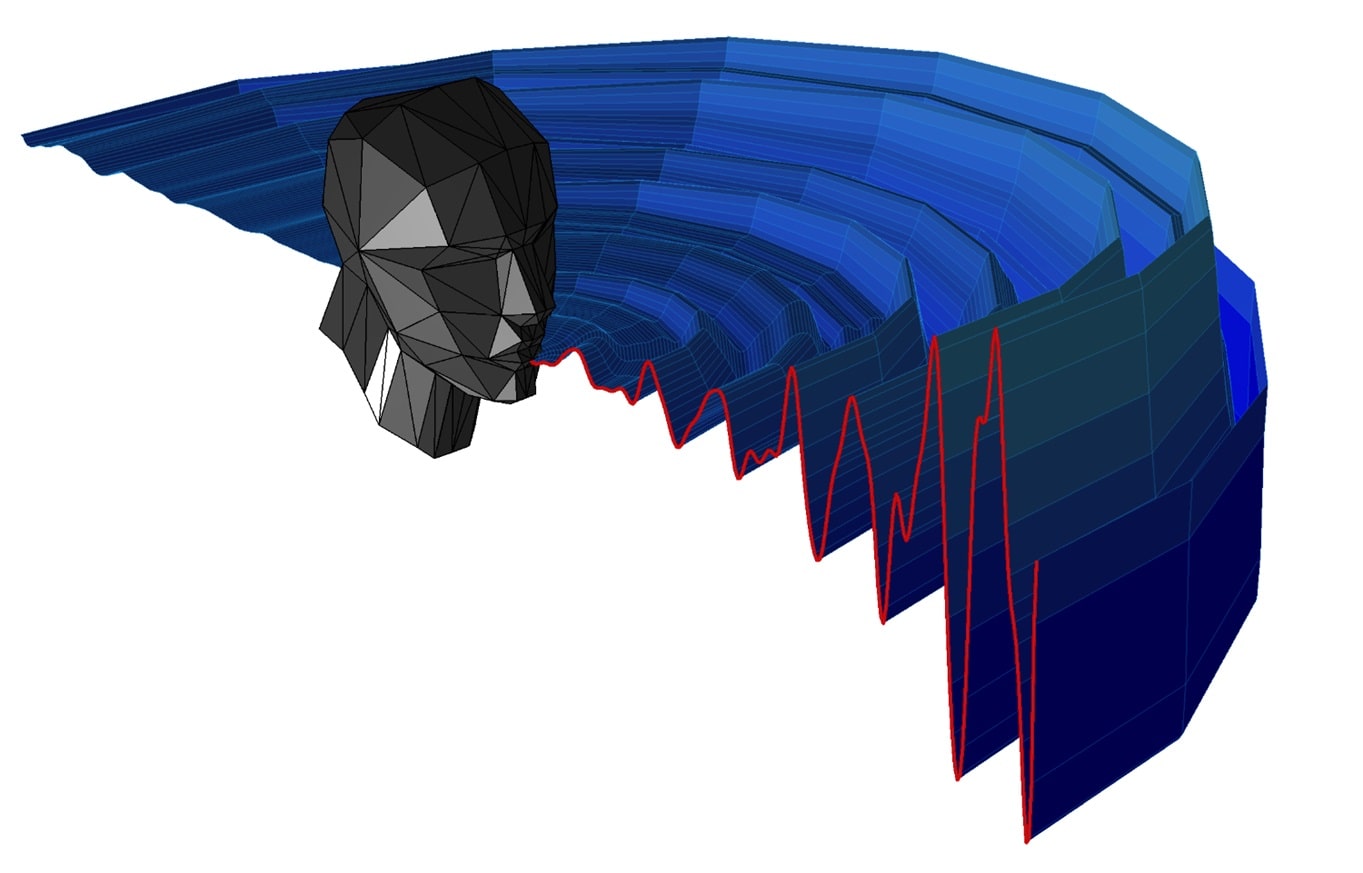

The emissions that proficient echolocators prefer to use are mouth clicks. In our work we have measured thousands of these clicks (see Fig.2), and have found that they are very brief (~5 ms), and that the beam of sound spreads out in a way that I like to refer to as a “beam of an acoustic flashlight” (Thaler et al., 2017) (see Fig.3). We have also found that people adjust their clicks dynamically. For example, people will make more clicks or louder clicks when the echo is comparatively weak (Thaler et al., 2018) or to compensate for interfering noises (Castillo-Serrano et al., 2021). This dynamic nature is likely to be important when using echolocation out and about.

We also use motion capture to investigate how echolocation is related to body movement, for example during walking. The motion capture technology we use is the same used to create CGI movies such as Avatar or The Polar Express. Reflective markers are placed on a person’s body, and movements of these markers are captured with special cameras (see Fig.4). Using this technology, we found that echolocation can support walking in a similar way to vision (Thaler et al., 2020). We found that people who are blind and who have experience using echolocation walk just as fast as people using vision. They also have walking paths that are very similar to sighted people using vision, for example when navigating around obstacles.

Understanding brain activity related to echoes

We are also interested in the brain basis for echolocation in people. Neuroimaging methods such as functional magnetic resonance imaging (fMRI) are suitable for investigating this. One of the problems we face when using fMRI to investigate echolocation is that the scanner is a very confined space, with not enough room to actually echolocate. During scanning, the participant lies inside a narrow tube that is only 60 cm in diameter, and the inside of that tube is only about 10 cm from the participant’s eyes and mouth. This problem can be overcome by using “virtual” echo-acoustic spaces.

In my lab we make use of passive listening scenarios. To do this, we first make recordings inside the ears of a participant whilst they echolocate in various scenarios outside the scanner. Subsequently, inside the scanner, these scenarios are recreated by playing the recordings back using special in-ear headphones. Other labs have overcome the space problem by recording the clicks that people make inside the scanner, and then processing them to create a virtual scene, which is played back to the participant via headphones. The advantage of that technique is that the participant can make their own emissions during scanning. In addition, we use special scanning sequences, so that the MRI scanner is silent whilst the participant is listening to the echolocation sounds. If we used regular scanning sequences, it would be too noisy for the participant to hear anything.

Which parts of the brain are activated?

Studies using these techniques have shown that people who are blind and skilled in echolocation use not only the hearing part of their brain to process echoes, but also those parts of the brain that process vision in sighted people (Thaler et al., 2011; Wallmeier et al., 2015). In people who are normally sighted, early visual cortical areas, such as primary visual cortex, are activated by visual stimulation in a specific pattern that is referred to as retinotopy. We have found that in blind echolocators, the primary visual cortex is activated by acoustic stimulation in a specific pattern that resembles retinotopy (Norman and Thaler, 2019) (see Fig.5). Our results suggest that “retinotopic” activity can also be driven by sound, and that this is facilitated by experience with echolocation. This result challenges our classical understanding of the organisation of brain function by sensory modality and opens other ways of understanding the human senses.

Understanding the brain’s ability to adapt and learn new skills

Current research in my lab is investigating how these changes arise in the human brain. The findings will be useful for learning about the inherent ability (or limitations) of the human brain to adapt to learning new skills. Echolocation is a perfect paradigm for measuring these changes because people start from scratch (unless they already have experience in echolocation), so that there is a good baseline from which change can be measured. It also provides large scope for improvement. This will also be useful for determining rehabilitative effects of training for people who are blind. Specifically, we can track change over time and compare effects before and after training, both on the behavioural and the brain level.

The benefits of click-based echolocation for people who are visually impaired or blind

Echolocation is a learnable skill that can be acquired by people who are blind as well as by people who are sighted. In a recent study we investigated whether training in click-based echolocation leads to meaningful benefits for people who are blind (Norman et al., 2021). In our study we trained people who were sighted and people who were blind (aged 21–79 years) over the course of 10 weeks. People were trained to use their own mouth clicks to determine the size, location and orientation of objects placed in front of them at various distances. They were also trained in a computer-based echolocation task, where they used buttons on a computer keyboard to navigate their way around a set of corridors using echolocation sounds that they heard over headphones. Everyone improved their echolocation skills, i.e. the accuracy or speed of their responses in these various tasks got better.

Importantly, neither age nor blindness was a limiting factor in participants’ rate of learning (i.e. their change in performance from the first to the final session) or in their ability to apply their echolocation skills to new, untrained tasks. Three months after training was completed, we found that all blind participants reported improved mobility, and 83% of blind participants also reported improved wellbeing and independence in their daily lives. All blind participants in this study were independent travellers and already had mobility skills before taking part in the study (e.g. long cane or guide-dog users) (see Fig.6). Thus, any benefits of click-based echolocation we observed were in addition to those preexisting skills. The results from this work suggest that echolocation might be a useful skill for people who are blind, and that even 10 weeks of training can lead to measurable benefits in terms of mobility, independence and wellbeing. The fact that the ability to learn click-based echolocation was not strongly limited by age or level of vision has positive implications for the rehabilitation of people with vision loss or in the early stages of progressive vision loss.
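The rate-of-learning measure mentioned above, a participant’s change in performance from the first to the final session, can be sketched in a few lines. This is only an illustration of the definition given in the text, not the analysis used in the study. The function name `learning_change` is hypothetical, and the scores are made-up numbers, not data from Norman et al. (2021).

```python
from typing import Sequence


def learning_change(session_scores: Sequence[float]) -> float:
    """Change in performance from the first to the final session.

    session_scores holds one score per training session, in order.
    A positive result means performance improved (assuming a higher
    score is better, as with proportion correct).
    """
    if len(session_scores) < 2:
        raise ValueError("need at least a first and a final session")
    return session_scores[-1] - session_scores[0]


# Hypothetical proportion-correct scores for one participant,
# invented for illustration only.
example_scores = [0.55, 0.60, 0.72, 0.80]
print(f"change from first to final session: {learning_change(example_scores):+.2f}")
```

Comparing this value across participants of different ages or levels of vision is one simple way to ask whether either factor limits learning, under the assumption that all participants complete the same tasks.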

Ongoing and future work in my lab will continue investigating issues relating to human echolocation and using echolocation as a paradigm to better understand the human brain and cognition. For example, we would like to use echolocation to learn more about the brain’s ability to adapt to change as a function of age. How do children acquire echolocation skills, how does this compare to adults, and how is this related to sensory loss? Better understanding of such issues might also have potential applications for the timing of rehabilitative interventions for children and young people.

References

Castillo-Serrano JG et al. (2021). Increased emission intensity can compensate for the presence of noise in human click-based echolocation. Scientific Reports 11(1), 1-11. http://doi.org/10.1038/s41598-021-81220-9.

Kolarik AJ et al. (2014). A summary of research investigating echolocation abilities of blind and sighted humans. Hearing Research 310, 60-68. https://doi.org/10.1016/j.heares.2014.01.010.

Kolarik AJ et al. (2021). A framework to account for the effects of visual loss on human auditory abilities. Psychological Review, 128(5), 913. https://doi.org/10.1037/rev0000279.

Norman L, Thaler L (2019). Retinotopic-like maps of spatial sound in primary ‘visual’ cortex of blind human echolocators. Proceedings of the Royal Society: Series B Biological Sciences 286(1912), 20191910. https://doi.org/10.1098/rspb.2019.1910.

Norman L et al. (2021). Human click-based echolocation: Effects of blindness and age, and real-life implications in a 10-week training program. PLoS ONE 16(6), e0252330. https://doi.org/10.1371/journal.pone.0252330.

Thaler L et al. (2011). Neural correlates of natural human echolocation in early and late blind echolocation experts. PLoS ONE 6(5): e20162. https://doi.org/10.1371/journal.pone.0020162.

Thaler L et al. (2017). Mouth-clicks used by blind human echolocators – Signal description and model based signal synthesis. PLoS Computational Biology 13(8): e1005670. https://doi.org/10.1371/journal.pcbi.1005670.

Thaler L et al. (2018). Human echolocators adjust loudness and number of clicks for detection of reflectors at various azimuth angles. Proceedings of the Royal Society: Series B Biological Sciences 285(1873), 20172735. https://doi.org/10.1098/rspb.2017.2735.

Thaler L et al. (2020). The flexible action system: Click-based echolocation may replace certain visual functionality for adaptive walking. Journal of Experimental Psychology: Human Perception and Performance 46(1), 21-35. https://doi.org/10.1037/xhp0000697.

Wallmeier L et al. (2015). Aural localization of silent objects by active human biosonar: Neural representations of virtual echo-acoustic space. European Journal of Neuroscience, 41(5), 533-545. https://doi.org/10.1111/ejn.12843.