Virtual reality (VR) setups are a promising tool for neurorehabilitation. However, most existing VR therapeutic protocols offer environments that rely exclusively on vision and do not render haptic feedback when users interact with virtual objects. Haptic feedback, including tactile and proprioceptive inputs, is fundamental for sensorimotor function, in particular for skilled hand-object interactions (see Wolpert and Flanagan, 2001). In addition, emerging evidence indicates that incorporating multisensory stimuli enhances the VR experience (Melo et al., 2022) and promotes neuroplasticity (Laver et al., 2017). Here we investigated whether the presence of haptic feedback during hand-object interactions in VR can modulate corticospinal excitability (CSE) at the point of object contact.

The experimental design comprised four sensory feedback conditions: (1) vision only, (2) vision combined with force feedback rendered by a haptic robot, (3) vision combined with robot-rendered force feedback and the actual touch of a real object, and (4) haptic feedback only. Participants (n = 26) were instructed to perform a brisk movement with their right index finger to touch either the virtual or the real object. A head-mounted display (Meta Quest Pro, Meta) presented an immersive virtual environment, controlled by a custom-made Unity platform (Unity Technologies), for the visual conditions. The environment showed a virtual cube and a virtual stylus, calibrated and co-located with, respectively, a real cube placed within reach on a table in front of the participants and the participants' index finger. A Phantom Touch X robot (3D Systems) provided force-feedback haptics.

To assess CSE, we applied single-pulse transcranial magnetic stimulation (TMS) with a figure-of-eight coil positioned over the left primary motor cortex. TMS was triggered at the moment the stylus contacted the virtual object. We measured the peak-to-peak amplitude of motor-evoked potentials (MEPs) in the right first dorsal interosseous (FDI) muscle and the root-mean-square (RMS) electromyographic (EMG) activity of the same muscle over the 200 ms preceding the TMS pulse. Statistical analyses used non-parametric Friedman's ANOVAs followed by Bonferroni-corrected post hoc pairwise comparisons. The experiments were approved by the King's College London ethics committee.
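The two EMG measures described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' analysis pipeline: the sampling rate, the 15 to 50 ms post-TMS search window for the MEP, the single-channel array layout and all function names are hypothetical choices made for the example.

```python
import numpy as np

# Hypothetical helpers: window limits, sampling rate and names are assumptions.


def _ms_to_samples(ms: float, fs: float) -> int:
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * fs / 1000.0))


def mep_peak_to_peak(emg: np.ndarray, fs: float, tms_index: int,
                     window_ms: tuple = (15.0, 50.0)) -> float:
    """Peak-to-peak MEP amplitude within an assumed post-TMS window."""
    start = tms_index + _ms_to_samples(window_ms[0], fs)
    stop = tms_index + _ms_to_samples(window_ms[1], fs)
    segment = emg[start:stop]
    if segment.size == 0:
        raise ValueError("MEP window falls outside the recording")
    return float(segment.max() - segment.min())


def pre_tms_rms(emg: np.ndarray, fs: float, tms_index: int,
                duration_ms: float = 200.0) -> float:
    """RMS of the EMG over the duration_ms immediately preceding the pulse."""
    n = _ms_to_samples(duration_ms, fs)
    if tms_index < n:
        raise ValueError("not enough pre-TMS samples")
    segment = emg[tms_index - n:tms_index]
    return float(np.sqrt(np.mean(np.square(segment))))


# Synthetic single-trial example (fs = 5 kHz is an arbitrary choice).
fs = 5000.0
emg = np.zeros(10000)
tms_index = 5000
emg[4000:5000] = 0.2   # constant background activity in the 200 ms pre-pulse window
emg[5100] = 1.0        # positive MEP peak, 20 ms after the pulse
emg[5150] = -0.5       # negative MEP peak, 30 ms after the pulse
amp = mep_peak_to_peak(emg, fs, tms_index)
rms = pre_tms_rms(emg, fs, tms_index)
```

In practice these per-trial values would be averaged per participant and condition before any group statistics; the fixed MEP window is a simplification, and the appropriate window depends on the recording setup.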

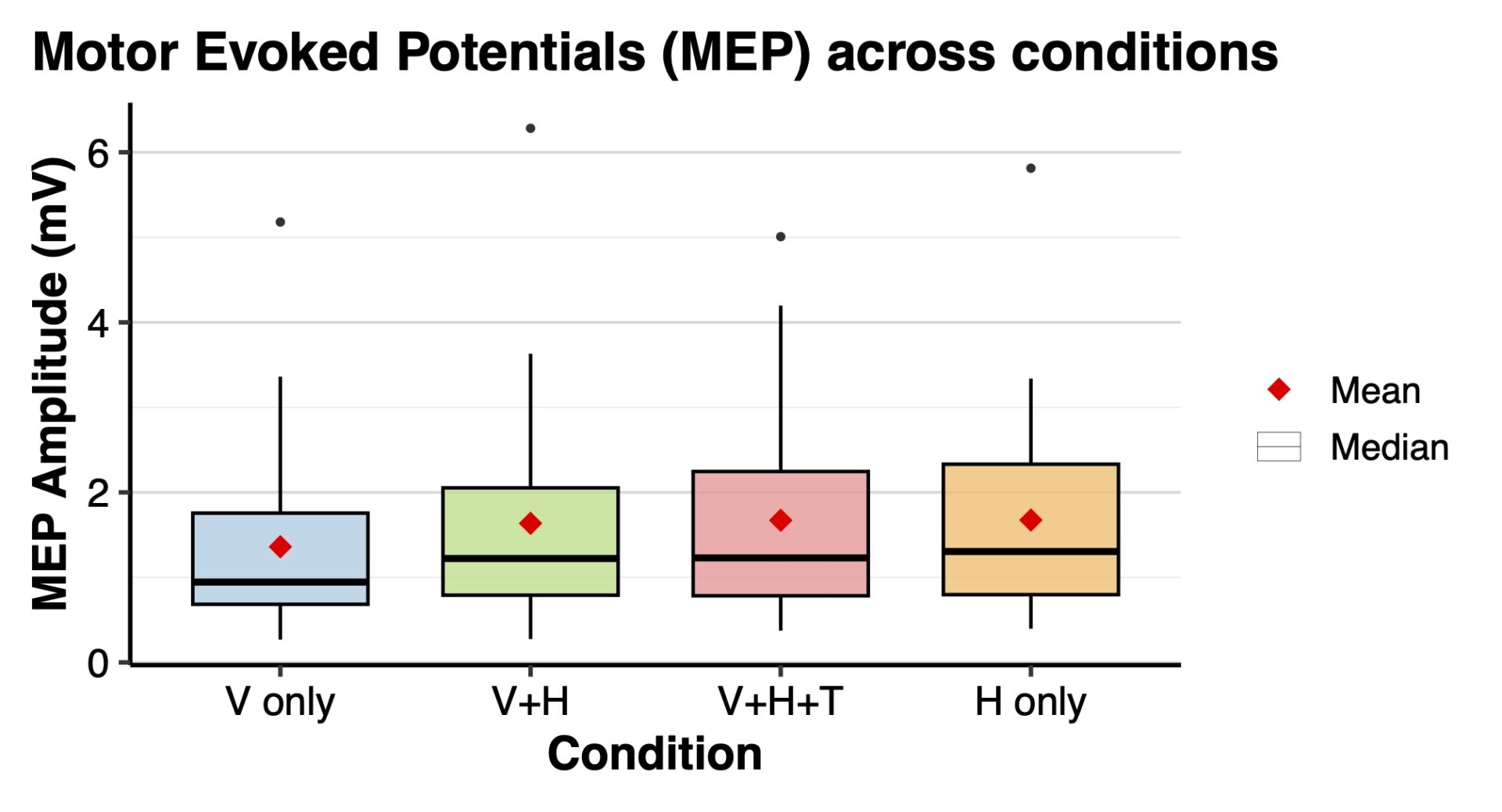

We found a significant main effect of sensory feedback on MEP amplitudes (χ²(3) = 22.48, p < 0.001), suggesting that CSE is differentially modulated depending on the sensory modalities involved in object contact. Post hoc analyses revealed that the ‘vision only’ condition elicited significantly lower CSE than each of the other three conditions (all p < 0.001, see figure). These results suggest that CSE is lower when hand-object contact is perceived exclusively through visual input than when any type of haptic feedback is present, irrespective of whether it is combined with visual information. Sensory feedback had no effect on pre-TMS RMS muscle activity (χ²(3) = 4.02, p = 0.259), indicating that the MEP differences were not driven by differences in background muscle activity.
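The χ²(3) statistics above come from Friedman's test across the four conditions (k = 4, hence 3 degrees of freedom). The sketch below shows one way to run this analysis with SciPy, assuming one per-participant summary value (for example, mean MEP amplitude) per condition. The abstract does not name the post hoc test, so Wilcoxon signed-rank tests are an assumption here; with four conditions there are six pairwise comparisons, so each raw p-value is multiplied by 6 and capped at 1. The function name and condition labels are hypothetical, and the data are synthetic.

```python
from itertools import combinations

import numpy as np
from scipy.stats import friedmanchisquare, wilcoxon

# Shorthand labels for conditions (1)-(4); the labels themselves are illustrative.
CONDITIONS = ["vision only", "vision + robot", "vision + robot + real", "haptic only"]


def friedman_with_posthoc(data):
    """data: (n_participants, n_conditions) array, one summary value per cell.

    Returns the Friedman chi-square, its p-value, and Bonferroni-corrected
    p-values for all pairwise Wilcoxon signed-rank tests (an assumed choice).
    """
    data = np.asarray(data, dtype=float)
    chi2, p = friedmanchisquare(*data.T)  # one sample per condition column
    pairs = list(combinations(range(data.shape[1]), 2))
    posthoc = {}
    for i, j in pairs:
        _, p_raw = wilcoxon(data[:, i], data[:, j])
        # Bonferroni: scale by the number of comparisons, capped at 1.
        posthoc[(CONDITIONS[i], CONDITIONS[j])] = min(p_raw * len(pairs), 1.0)
    return chi2, p, posthoc


# Synthetic data only (not the study's results): 26 participants, 4 conditions,
# with the first condition shifted downwards.
rng = np.random.default_rng(0)
data = rng.normal(1.0, 0.2, size=(26, 4))
data[:, 0] -= 0.8
chi2, p, posthoc = friedman_with_posthoc(data)
```

The same function can be applied unchanged to the per-participant pre-TMS RMS values to check for differences in background muscle activity.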

We conclude that the presence of haptic feedback in VR settings is a key driver of corticospinal excitability at object contact, an important factor to consider when designing VR protocols aimed at promoting neuroplasticity.