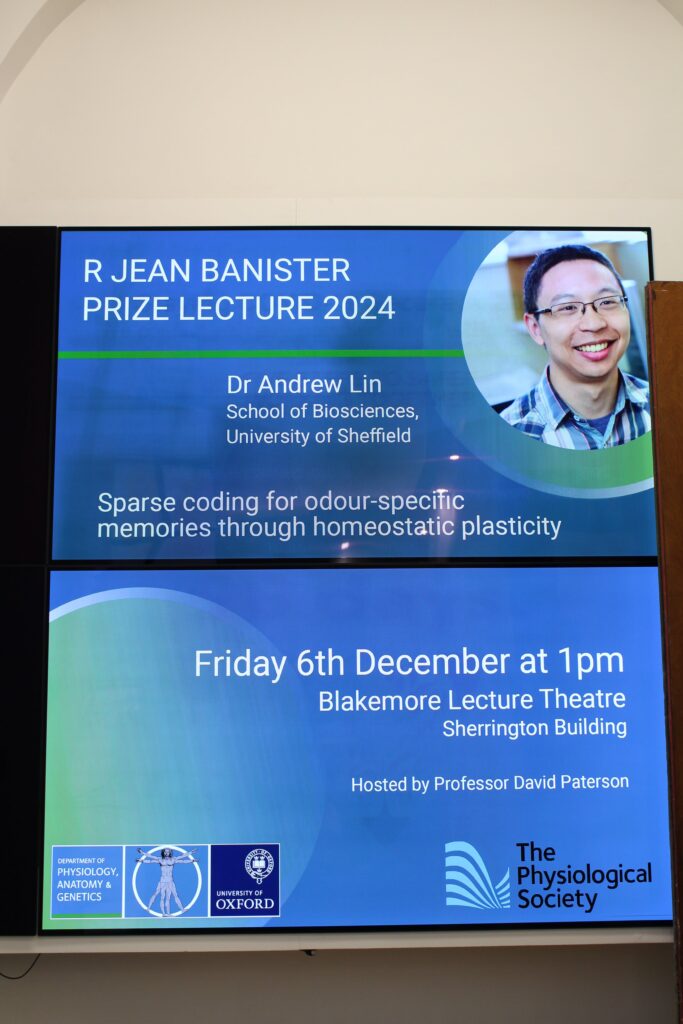

Prize Lecture: Sparse coding for odour-specific memories through homeostatic plasticity

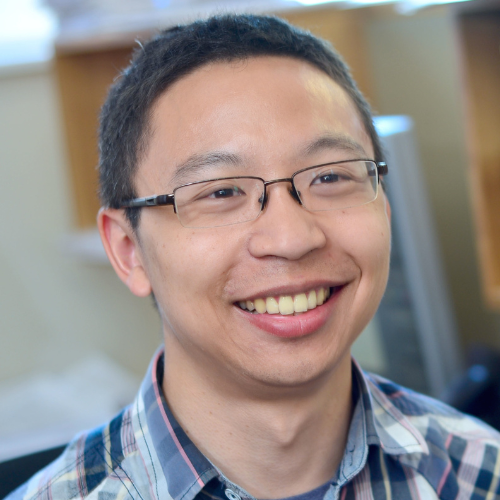

Dr Andrew Lin, University of Sheffield, UK

2024 R Jean Banister Prize Lecture recipient

In 2024, Andrew Lin captivated audiences by explaining how sensory circuits develop and maintain optimal coding strategies for storing stimulus-specific associative memories. As the recipient of The Society’s R Jean Banister Prize Lecture, he presented his series titled ‘Sparse coding for odour-specific memories through homeostatic plasticity’, delivering his last lecture in December 2024 at the University of Oxford. In this article, Andrew shares his inspiration for studying the olfactory system of the fruit fly and how this research could build better artificial intelligence systems and advance treatments for neurological disorders.

“It is a tremendous honour to receive the 2024 R Jean Banister Prize Lecture, and I’m very grateful to The Physiological Society for the recognition and for the opportunity to present my lab’s research to colleagues around the country.”

I’ve been interested in understanding how the brain works since I was a kid and first encountered the idea that all my thoughts, feelings, perceptions and memories are nothing more than my brain cells firing. As an undergraduate, I became interested in development and neuronal plasticity, particularly in the idea of “self-assembly”: that the brain wires itself up and modifies itself during learning using basic molecular and synaptic rules. My first major research project was on olfactory associative learning in the fruit fly Drosophila, in my undergraduate final-year thesis with Professor Sam Kunes at Harvard University. I went on to study axon guidance during my PhD with Professor Christine Holt at the University of Cambridge, where I investigated local protein synthesis in the axonal growth cones of retinal ganglion cells.

Toward the end of my PhD, I became more interested in systems neuroscience, particularly in how neural circuits carry out useful computations to control behaviour. At the same time, recent technical advances had made it more feasible to record and manipulate the activity of specific neurons in flies. So, for my postdoc, I returned to my earlier interest in learning and memory in Drosophila, now using these tools to link behaviour and neural circuit physiology in exciting new ways. Working with Gero Miesenböck at the University of Oxford, I showed that sparse activity in memory-encoding neurons allows flies to learn to discriminate similar stimuli.

Circuitry, learning and memory – how does it all work?

In my own lab at the University of Sheffield, we try both to understand the mechanisms and rules behind neuronal plasticity and learning, and to understand the logic behind these rules: why is the brain the way it is? That is, to what extent are neural circuits and synaptic plasticity rules “optimal” rather than being evolutionary accidents? We address this question in the fly olfactory system by combining experiments that test how neural circuits work with computational work that tests models of how these circuits could work and how well these theoretical alternatives perform.

These questions formed the basis of my R Jean Banister Lecture, where I discussed our recent findings linking neuronal plasticity with computational function. We’ve been particularly interested in homeostatic plasticity, the process by which the brain stabilises its activity by compensating for perturbations. We developed the fly’s olfactory memory centre into a new model for studying homeostatic plasticity in vivo, and found that the circuit can homeostatically compensate for excess excitation or excess inhibition.

The rules of biological wiring and computation can inform new treatments

While homeostatic plasticity is normally seen as primarily stabilising the brain, we found that it also improves learning: in our models, circuits learn more effectively when the individual neurons in the population use homeostasis to reach the same average activity level. Applying this “equalisation” method to machine learning algorithms improved their performance, showing how understanding biological brains can inspire better artificial intelligence.
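For readers who like to see an idea in code, the “equalisation” principle can be illustrated with a toy sketch. This is not the lab’s actual model: the rectified-linear neurons, the input gains, the shared target level and the threshold update rule below are all assumptions chosen purely for illustration. Each simulated neuron nudges its own threshold up when it is too active and down when it is too quiet, so that neurons with very different input strengths end up with similar average activity.

```python
import random


def simulate_homeostasis(n_neurons=5, n_steps=20000, target=0.1, rate=0.01, seed=0):
    """Toy model (illustrative only): each neuron adapts its own threshold
    until its average activity approaches a shared target level."""
    rng = random.Random(seed)
    # Hypothetical input gains: some neurons are driven more strongly than others.
    gains = [0.5 + 0.5 * i for i in range(n_neurons)]
    thresholds = [0.0] * n_neurons
    for _ in range(n_steps):
        stimulus = rng.random()  # shared input drawn uniformly from [0, 1)
        for i in range(n_neurons):
            activity = max(0.0, gains[i] * stimulus - thresholds[i])
            # Homeostatic update: raise the threshold when the neuron is too
            # active, lower it when the neuron is too quiet.
            thresholds[i] += rate * (activity - target)
    return gains, thresholds


def mean_activity(gain, threshold, n_samples=20000, seed=1):
    """Estimate a neuron's average activity over fresh random stimuli."""
    rng = random.Random(seed)
    total = sum(max(0.0, gain * rng.random() - threshold) for _ in range(n_samples))
    return total / n_samples


if __name__ == "__main__":
    gains, thresholds = simulate_homeostasis()
    for gain, threshold in zip(gains, thresholds):
        before = mean_activity(gain, 0.0)
        after = mean_activity(gain, threshold)
        print(f"gain={gain:.1f}  mean activity before={before:.3f}  after={after:.3f}")
```

Before adaptation, the strongly driven neurons are far more active than the weakly driven ones; after adaptation, every neuron’s average activity sits close to the shared target. The sketch only shows the equalisation step itself, not its effect on learning performance.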

We’re further exploring to what extent the fly brain’s homeostatic plasticity matches what our models suggest is computationally optimal. We’re also starting to test whether the particular synaptic plasticity rules used for learning in flies have computational advantages compared to alternative rules. We hope our work will not only help us understand how the brain works, but also improve artificial brains and help treat neurological disorders.

Throughout all of this work, I’ve been grateful to my mentors and peers for their support and guidance, and to all the talented students and postdocs in my lab, past and present, whose creativity and dedication have made this journey so much fun. I’m looking forward to all the future discoveries we will make together.

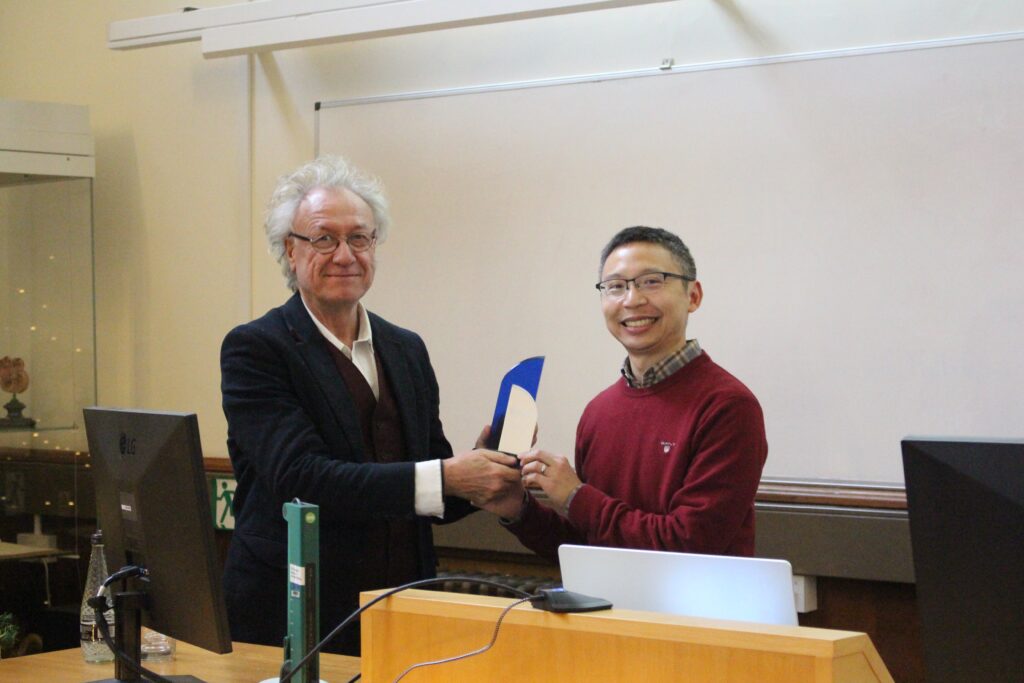

Professor David Paterson, Past-President of The Physiological Society, presenting the Prize Lecture award to Dr Andrew Lin.